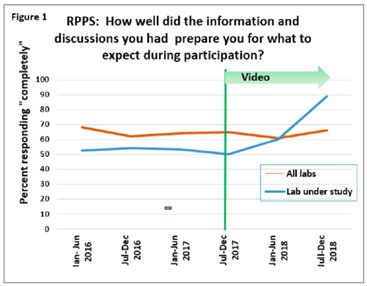

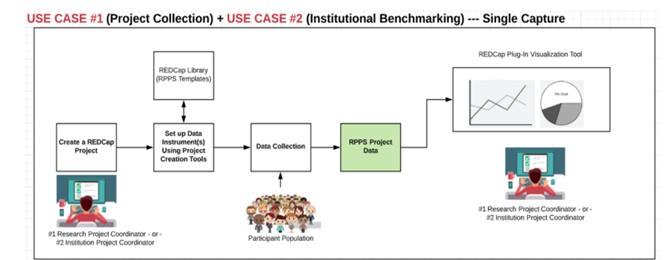

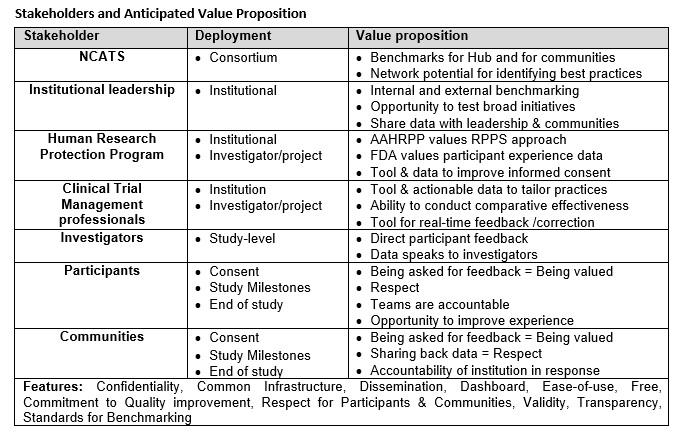

In May, the National Center for Advancing Translational Sciences (NCATS) granted a $2.7 million award to Rockefeller University to develop new infrastructure facilitating collection of research participant feedback for widespread adoption: “Empowering the Participant Voice: Collaborative Infrastructure and Validated Tools for Collecting Participant Feedback to Improve the Clinical Research Enterprise” (PAR-19-099, 1-U01-TR003206). Principal investigator Dr. Rhonda G. Kost, Associate Professor in the Center for Clinical Translational Science (CCTS), will lead a six-site collaboration to build the Rockefeller-developed Research Participant Perception Survey (RPPS) into a new low-friction informatics and analysis platform that supports rapid collection of actionable participant feedback. Dr. Roger Vaughan, head of biostatistics at the Rockefeller CCTS, will collaborate on analysis methods and program evaluation. Collaborating CTSA hubs for this award are Vanderbilt University, Johns Hopkins, Duke, University of Rochester, and Wake Forest University.

Why survey participants?

Partnering with patients and research participants, including those from communities disproportionately affected by health disparities, is essential to conducting clinical translational research. Recruitment, retention, and representative sampling remain major challenges to research. Assessing participants’ research experiences provides essential outcome data for improving the clinical research enterprise, yet those experiences go largely unmeasured. The paucity of direct participant experience data to support evaluation of research practices is a critical translational gap. This grant is directed at addressing this crucial need to improve clinical investigation.
To execute the project aims, the teams will collaborate in Year 1 to develop integrated RPPS/REDCap tools and dashboard and analytics modules, as well as formalize the implementation framework to afford institutions flexibility in their specific deployments of demonstration cases, while preserving common elements and standards for future data integration and benchmarking. In Years 2 and 3, sites will implement use cases – study-level surveys, departmental aggregation of project surveys, and institutional-level surveys – among different targeted populations and using various outreach platforms to demonstrate the usability and value of the infrastructure and make refinements. Key deliverables are the examples of actionable findings gleaned from participant feedback that institutions then use to drive measurable improvements to research experiences. In Years 3 and 4, the team will actively disseminate the infrastructure across the REDCap user community and CTSA Consortium hubs. The value proposition for different stakeholders – investigators, clinical research managers, department and institutional leadership, and NCATS – will be refined in the path to widespread use. A key deliverable in dissemination is gaining the critical mass of users needed to support valid inter-institutional benchmarking.

Valid tools, good intentions, and persisting barriers

Through a series of multi-site projects from 2010 to 2018, Dr. Kost led the development of a suite of validated Research Participant Perception Survey (RPPS) tools in collaboration with investigators at 15 NIH-funded academic health centers. The RPPS collects actionable data about study conduct, informed consent, trust, respect, education, and communication.
The RPPS tools - available in English and Spanish and in long, short, and ultrashort versions - have been in use to drive improvements in research at Rockefeller since 2013, at Johns Hopkins University since 2017, and in some form at up to 16 CTSA hubs in total. Requirements for infrastructure to support implementation, redundant across sites, have stalled uptake and collaboration related to participant outcomes.

When collected, participant experience data can provide the evidence base to support the impact of innovative practices. Figure 1 shows the change in one measure of participant experience before and after a team implemented a video designed to enhance informed consent. It plots the responses to the RPPS question “How well did the discussions and information provided before the study prepare you for your research experience?” The frequency of the response “completely” from participants in this study (blue) was 50-53% in the preceding years (2015-2017), but rose to 75% in the year after the new video (green) came into use in late 2017. By comparison, the response scores for the same question for all studies at Rockefeller (orange) were unchanged in the same time frame. While limited by retrospective design and sample size, this example illustrates the kind of impact data that could be collected via a streamlined RPPS/REDCap platform.

Despite the availability of the valid RPPS, institutions report persisting barriers to broad implementation, pointing to the need for a ready-to-use platform, standardized approach, low-cost institutional infrastructure, and plug-and-play visualization and analysis tools to streamline collection of participant feedback.

Leveraging a platform in wide adoption

REDCap (https://projectredcap.org) is a secure, web-based application with workflow and software designed exclusively to support rapid development and deployment of data capture tools for research studies, with minimal training of the user.
REDCap has security and usability features specifically designed to support participant-facing surveys and flexible deployment strategies. REDCap was developed at Vanderbilt by project collaborator Dr. Paul Harris, and has been used by over 970,000 users at more than 3,500 institutions to support more than 725,000 research projects. Virtually all of the CTSAs use REDCap. The REDCap Shared Data Instrument Library (SDIL) makes validated data collection instruments available to researchers, and supports technologies such as web services that allow integrated data collection through other platforms. When complete, the integrated RPPS/REDCap tools will be made available for direct download through the SDIL. A preliminary map of the data flow for a project-level or institutional-level survey, without inter-institutional integration, is shown in the accompanying figure.

Defining Success

With many deliverables along the way, both process-oriented and tangible, the ultimate measures of the success of the project will be: 1) the production of an Implementation Guide, RPPS/REDCap project tools, and infrastructure; 2) outcome data from completed demonstration projects at the sites that illustrate the utility of the RPPS/REDCap to collect representative feedback from participant populations and produce actionable findings to generate impactful improvements; and 3) embrace of the value proposition of RPPS/REDCap infrastructure, evidenced by an expanded set of users, including REDCap users and institutional leadership, and participation in consortium benchmarking. The goal is to provide value to a range of stakeholders to sustain broad uptake and use of participant feedback to improve research.

The RPPS/REDCap project is also supported in part by an award to Rockefeller University from the National Center for Advancing Translational Sciences, 1U TR0001866.

Stakeholders and Anticipated Value Proposition

October 23, 2020

$2.7M Award to Streamline Collecting Participant Feedback and Drive Improvement to the Clinical Research Enterprise

By Rhonda G. Kost, MD